CI Calibration

Tolerance

The tolerance in the CI calibration window gives you more control over the calibration. Let's say you want to calibrate 10 mA (20 dBmA) with a tolerance of 0,5 dB. This means that all generated levels between 10 mA and 10,59254 mA are accepted as correct values.

The first thing you may wonder is: why not fill in 0 dB? This is possible, but it will generate a large number of warnings because the desired levels cannot be reached exactly. A tolerance of 0 dB is only possible in an ideal world, and unfortunately our measuring equipment is not ideal. So what is the correct setting? That depends on your needs: it is always a trade-off between measuring speed and accuracy.

When the desired level has been reached, this level is stored in the calibration file. For example, if 10 W generates 11 mA, then 11 mA is stored together with the 10 W. But if you then perform a substitution test at 10 mA, will it reproduce the 11 mA? The answer is no: RadiMation will calculate the power needed to achieve 10 mA and level to that. So in theory you could calibrate at 1 mA and test at 100 mA; in practice you calibrate at 2 or 3 levels, for example 10 mA and 100 mA.
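The tolerance window from the 10 mA / 0,5 dB example can be reproduced with a few lines of Python. This is a minimal sketch, not RadiMation code: the function names are hypothetical, and it assumes that the tolerance only applies above the target level, as the 10 mA to 10,59254 mA range in the example suggests.

```python
import math


def to_dbma(current_ma: float) -> float:
    """Convert a current in mA to dBmA (dB relative to 1 mA)."""
    return 20 * math.log10(current_ma)


def tolerance_window(target_ma: float, tolerance_db: float) -> tuple[float, float]:
    """Return the (lower, upper) limits in mA of the accepted levels.

    Assumption: the window only extends upward, so the lower limit is the
    target itself and the upper limit lies tolerance_db above it.
    """
    upper_ma = target_ma * 10 ** (tolerance_db / 20)
    return target_ma, upper_ma


def is_accepted(measured_ma: float, target_ma: float, tolerance_db: float) -> bool:
    """True if a measured current falls inside the tolerance window."""
    lower_ma, upper_ma = tolerance_window(target_ma, tolerance_db)
    return lower_ma <= measured_ma <= upper_ma


low, high = tolerance_window(10.0, 0.5)
print(f"{to_dbma(10.0):.1f} dBmA, accepted {low:.5f} mA to {high:.5f} mA")
# prints: 20.0 dBmA, accepted 10.00000 mA to 10.59254 mA
```

With a tolerance of 0 dB the window collapses to a single value, which a real measurement will practically never hit exactly.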

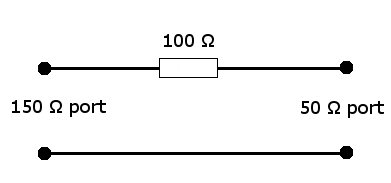
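The substitution step described above (10 W produced 11 mA, but the test asks for 10 mA) can be approximated as follows. This is a hypothetical sketch, not RadiMation's actual algorithm: it assumes a linear set-up in which the injected current is proportional to the square root of the forward power, so the required power scales with the square of the current ratio.

```python
import math


def power_for_current(cal_power_w: float, cal_current_ma: float,
                      target_current_ma: float) -> float:
    """Estimate the forward power needed to reach target_current_ma.

    Hypothetical helper. Assumes a linear set-up in which the current is
    proportional to the square root of the forward power, so the power
    scales with the square of the current ratio.
    """
    ratio = target_current_ma / cal_current_ma
    return cal_power_w * ratio ** 2


def power_step_db(cal_current_ma: float, target_current_ma: float) -> float:
    """Change in forward power (dB) needed to go from the calibrated to the target current."""
    return 20 * math.log10(target_current_ma / cal_current_ma)


# Calibration point from the text: 10 W produced 11 mA.
needed_w = power_for_current(10.0, 11.0, 10.0)
print(f"{needed_w:.2f} W ({power_step_db(11.0, 10.0):+.2f} dB)")
# prints: 8.26 W (-0.83 dB)
```

Extrapolating far from the calibrated level (for example from 1 mA to 100 mA) relies entirely on this linearity assumption.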

Insertion loss of the 150 to 50 Ω adapter

The loss from the voltage on the 150 Ω port to the voltage on the 50 Ω port is 9,5 dB.

How do you calculate this?

The easiest way to show this is with an example.

We assume that the voltage on the 150 Ω port is 1 V. We chose this value because 1 V equals 0 dBV, which makes the calculation easy.

The voltage across the 50 Ω port is 1/3 of the voltage on the 150 Ω port. In formula:

U_{50 \Omega} = \frac{1}{3} \cdot U_{150 \Omega} = \frac{1}{3} \ \text{V}

And 1/3 V is about -9,5 dBV. In formula:

20 \log_{10}\left(\frac{U_{50 \Omega}}{1 \ \text{V}}\right) = 20 \log_{10}\left(\frac{1}{3}\right) \approx -9{,}542425094 \ \text{dBV}
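The calculation above can be checked numerically. This sketch assumes the adapter is built as a 100 Ω series resistor in front of the 50 Ω port (the source only gives the 1/3 ratio); that construction gives the 150 Ω input impedance and the 1/3 voltage divider. The names `to_dbv` and `divider_ratio` are hypothetical helpers.

```python
import math


def to_dbv(volts: float) -> float:
    """Convert a voltage in V to dBV (dB relative to 1 V)."""
    return 20 * math.log10(volts)


def divider_ratio(series_ohm: float, load_ohm: float) -> float:
    """Voltage ratio of a series resistor feeding a load resistor."""
    return load_ohm / (series_ohm + load_ohm)


# Assumption: 100 ohm series resistor into the 50 ohm port,
# so the 150 ohm port sees 100 + 50 = 150 ohm.
SERIES_OHM = 100.0
LOAD_OHM = 50.0

u_150 = 1.0  # 1 V = 0 dBV on the 150 ohm port
u_50 = u_150 * divider_ratio(SERIES_OHM, LOAD_OHM)  # 1/3 V
insertion_loss_db = to_dbv(u_150) - to_dbv(u_50)

print(f"U_50 = {u_50:.4f} V = {to_dbv(u_50):.2f} dBV")
print(f"Insertion loss = {insertion_loss_db:.1f} dB")
# prints:
# U_50 = 0.3333 V = -9.54 dBV
# Insertion loss = 9.5 dB
```

Because the loss is a ratio, it does not depend on the 1 V starting point: any voltage on the 150 Ω port gives 9,5 dB less on the 50 Ω port.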